Perhaps we do not share the same definition of "critical"?

I ran a new-ish security scanning tool against a code repository today. Doesn't really matter which tool. Doesn't really matter which repository.

The experience felt strangely familiar:

-

It took about 5-10 minutes to run.

-

Twenty-one issues. Every single one rated "critical".

-

It took about 5-10 minutes to triage the issues.

-

Twenty-one issues. Every single one a false positive.

This feels familiar because it is familiar. Security y'all, why do we do this to ourselves?

Application codebases are generally complex and multi-facted. The environments that applications are deployed into are always complex and multi-faceted! Security tools are (often? occasionally?) reporting a possibility rather than a certainty and, as we all know, prone to false positives. Vulnerability severity ratings should be nuanced and subjective.

Vulnerability management teams, remember - security tooling isn't perfect, and just because a tool you ran reported a "critical" severity issue doesn't mean that is accurate for your codebase or your context.

More importantly, security tool authors / vendors - go run your product against an arbitrary new codebase, and think critically about how it is rating the issues that it flags. Do you need to tone down some of the "critical"s? Even better, can you communicate the level of certainty that the tool & specific test might be able to infer about an issue it is reporting, rather than assigning it what is often an unrealistic worst-case severity?

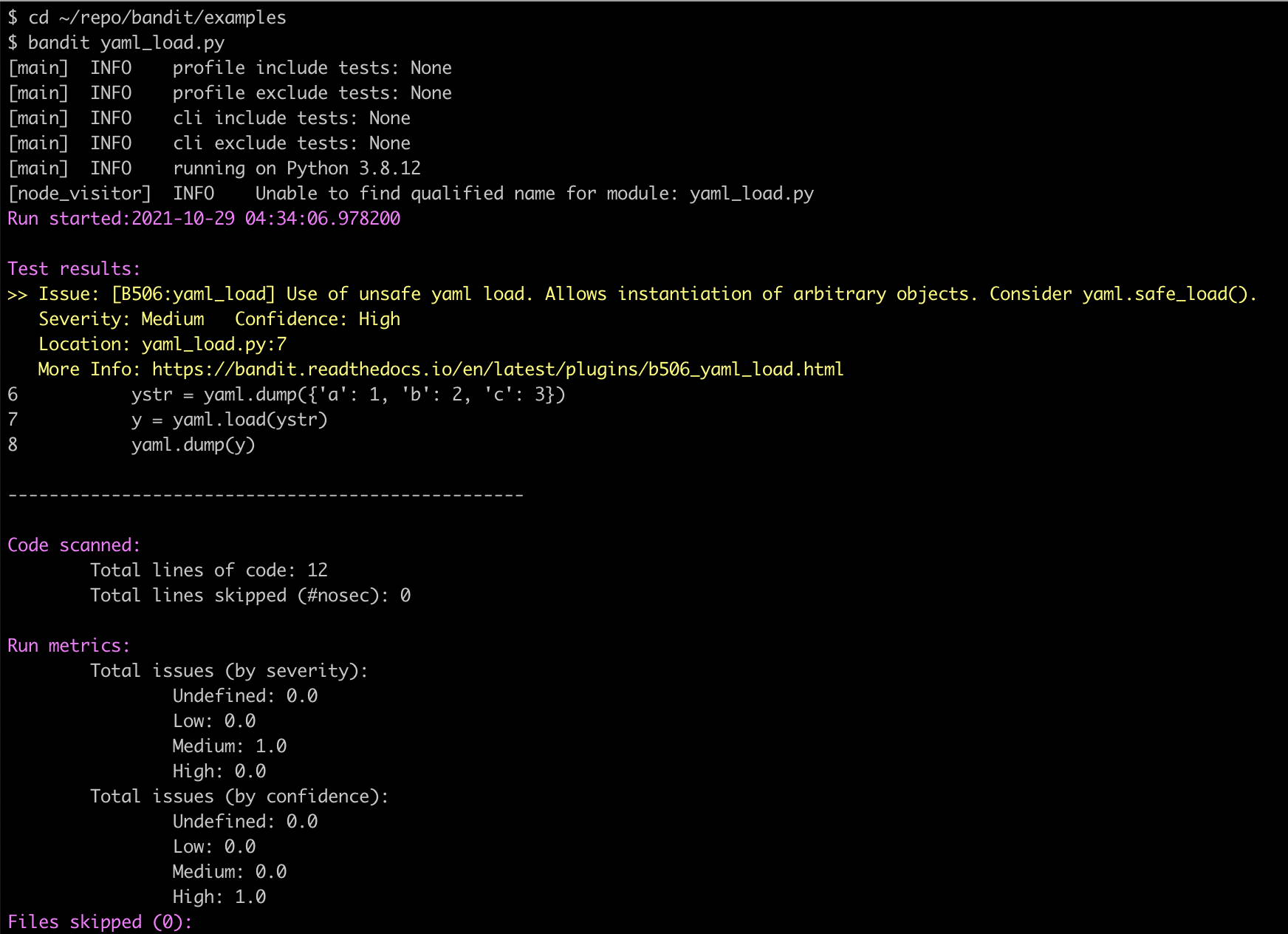

We did this to some degree with Bandit (Ooo, here's the merge where this was introduced... 2015, how time flies.) When it reports an issue, Bandit provides a high/medium/low/undefined severity rating but it also provides a high/medium/low/undefined confidence rating. At the time, we said confidence "is intended to allow a plugin writer to indicate their level of confidence that the issue raised is truly a security problem." Not perfect, but it helped.

Don't get me started on CVSS...